Introduction

In our first post in this series, “Your Data Is Listening — And Watching”, we explained how your daily digital footprint—every click, swipe, or voice command—gets logged and stitched into an invisible profile that algorithms use to influence everything you see.

In our second post, “Cambridge Analytica Rebranded”, we peeled back the curtain on how those same psychological targeting tools didn't vanish. They simply reappeared under new names, quietly shaping opinions behind a friendlier corporate veneer.

Now, in this third post, we’re fast-forwarding to 2025, where profiling is neither reactive nor static. It’s real-time, relentless, and happening whether you notice it or not.

Forget surveys or “take this quiz”—today’s AI watches how you speak, move, and even breathe, then clusters that info into a profile before you can blink.

In this post we'll explain how your devices (your phone, smartwatch, smart car, and even smart-home IoT sensors) are all “phoning home,” feeding AI systems that build emotional and behavioural snapshots of you.

We’ll map the journey from old-school psychometrics to always-on surveillance, walk through how your data becomes someone’s ad strategy or mental health nudges, and dig into why that should freak you out—or at least make you ask for your rights back.

How AI Builds You: From Manual Profiling to Real-Time AI Insights

Imagine the difference between sitting in a quiet room filling out a personality test, and going about your day while invisible AI quietly pieces together your emotional landscape. That’s the gulf between old-school Cambridge Analytica-style (CA) profiling and today’s real-time AI profiling.

Manual CA Profiling: Solid, but Old-School

Back in the day, profiling meant answering questionnaires or standardised tests. You consciously reported how you felt or what you preferred. It was procedural and explicit, but slow and stuck in static categories: think MBTI (Myers-Briggs Type Indicator), Big Five (OCEAN) personality scores, demographics, or psychometric buckets.

Real-Time AI Profiling: Always On, Always Learning

Fast forward to 2025. AI isn’t waiting for your answers anymore. Why would it? It doesn’t need your permission when it can simply observe you on your own devices. Through smartphone apps, wearables, connected cars, smart home gizmos, and even the software you interact with, AI is collecting a continuous stream of your behavioural signals:

- Wearables & physiological cues: Smartwatches and wristbands don’t just count steps or track your heart rate; they can estimate stress or anxiety in real time by monitoring skin conductance or heart rate variability (HRV).

- Multimodal emotion sensing: AI frameworks like IoT‑ConvNet process sensors, expressions, speech, and gestures through deep learning to detect emotional states across environments like smart homes or healthcare.

- App and device telemetry: Ever noticed how your phone or navigation system seems to “phone home”? Telemetry collects data on your habits—from when you open an app to how long you stare at a screen. This data fuels AI models that spot patterns at scale.

- IoT systems everywhere: Your connected fridge, home sensors, and even your car's audio system are constantly generating data. AI analyses all these signals, sometimes inferring mood or behaviour without you even realising it.
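The wearables point is easier to picture with a number in hand. Below is a minimal Python sketch of RMSSD (root mean square of successive differences), a common heart rate variability measure calculated from the gaps between heartbeats; lower HRV is often associated with stress. The 20 ms cut-off, the function names, and the sample readings are hypothetical, chosen purely for illustration. Real devices combine many signals and calibrate to each wearer.

```python
import math

def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between beat-to-beat intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two beat-to-beat intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

# Hypothetical cut-off, for illustration only; real devices calibrate per wearer
# and combine HRV with other signals such as skin conductance.
STRESS_THRESHOLD_MS = 20.0

def looks_stressed(rr_intervals_ms, threshold_ms=STRESS_THRESHOLD_MS):
    """Flag a window of heartbeats as 'stressed' when HRV (RMSSD) is low."""
    return rmssd(rr_intervals_ms) < threshold_ms

# Made-up readings: intervals between heartbeats in milliseconds.
relaxed_window = [800, 850, 790, 860, 810, 870]
tense_window = [600, 605, 598, 603, 600, 602]

print(looks_stressed(relaxed_window))  # False (RMSSD is roughly 58 ms)
print(looks_stressed(tense_window))    # True (RMSSD is roughly 4.7 ms)
```

Notice that nothing here asks you how you feel: a few seconds of pulse data is enough to produce a guess, right or wrong.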

With so much data streaming in from devices, apps, and infrastructure, AI can:

- Detect emotion in real time (e.g., joy, stress, anger) without relying on self-reporting.

- Cluster users dynamically based on behaviour, time of use, mood patterns, and usage contexts—not fixed typologies.

- Scale massively and instantly—profiling groups of people on the fly as data pours in.
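To show what "clustering users dynamically" can look like, here is a minimal sketch of sequential (online) k-means, one common technique where every new observation nudges the nearest cluster centre toward it, so groups keep shifting as data pours in. We have no insight into which algorithms real vendors use. The three features (hour of day, session length, a stress score), the starting clusters, and their labels are all hypothetical.

```python
import math

def to_features(hour_of_day, session_minutes, stress_score):
    """Hypothetical signals scaled to roughly 0..1 so no single feature dominates."""
    return (hour_of_day / 24, session_minutes / 60, stress_score)

class OnlineKMeans:
    """Sequential k-means: each new observation updates only its nearest centroid."""

    def __init__(self, initial_centroids):
        self.centroids = [list(c) for c in initial_centroids]
        # Treat each seed centroid as if it had already absorbed one observation.
        self.counts = [1] * len(self.centroids)

    def _nearest(self, point):
        return min(range(len(self.centroids)),
                   key=lambda i: math.dist(point, self.centroids[i]))

    def update(self, point):
        """Assign a point to a cluster and move that cluster's centre toward it."""
        i = self._nearest(point)
        self.counts[i] += 1
        eta = 1.0 / self.counts[i]  # step size shrinks as the cluster grows
        self.centroids[i] = [c + eta * (p - c) for c, p in zip(self.centroids[i], point)]
        return i

# Hypothetical starting clusters.
labels = ["late-night browser", "stressed commuter", "weekend exerciser"]
model = OnlineKMeans([
    to_features(23, 50, 0.3),
    to_features(8, 15, 0.8),
    to_features(10, 5, 0.2),
])

# Made-up events: (hour of day, minutes on screen, stress score).
for event in [(22, 60, 0.35), (7, 20, 0.9)]:
    cluster = model.update(to_features(*event))
    print(event, "->", labels[cluster])
```

Because the centres move with every event, nobody ever fills in a form: the "type" you belong to is simply wherever your latest behaviour lands.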

From Data Collection to Commercial Targeting

So what happens once AI has grouped you into a cluster? Simple: businesses—and plenty of other players—are lining up to buy and use that information. Suddenly, the ads you see aren’t just based on what you searched or bought, but on when you’re distracted, relaxed, anxious, or primed to spend.

How it works (generally speaking):

- Your phone, smartwatch, or even your smart car picks up micro-behaviours.

- AI clusters you with similar patterns—“late-night browsers,” “stressed commuters,” “enthusiastic weekend exercisers.”

- Those clusters are sold or leveraged across marketing systems to tailor offers and ads, or even to influence you directly.

All of this happens quietly in the background—no forms, no consent, just invisible AI constantly building, tweaking, and monetising your profile. And you don’t earn a cent. In fact, you probably paid for the privilege—only to have your own data packaged up and sold back to you.

Ethical & Privacy Risks

It’s hard to ignore the darker side of invisible profiling. As AI gets smarter and more independent, the privacy worries keep stacking up. Take agentic AI—the kind that runs with little human oversight. Sounds efficient, sure—but when it gets it wrong, who’s actually accountable?

Want to know who’s to blame when AI goes off the rails? Keep an eye on the lawsuit against Google and Character.AI over a teenager’s death (link below). It’ll show just how much (or how little) Big Tech will actually be held to account.

Sadly, I’m already calling it: a “thoughts and prayers” outcome at best. Once the T&Cs get waved around by high-priced lawyers, expect the blame to shift—service providers painted as victims, and parents demonised as “bad parents.”

August 26th 2025

The Stanford 2025 AI Index Report (below) found a 56% jump in AI-related privacy and security incidents in a single year.

Most of those were data breaches or flat-out misuse. In other words, the very systems profiling us in real time aren’t just busy watching—they’re also pretty exposed to bad actors.

Regulation & Governance Landscape

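Guardrails are finally being bolted on. In Europe, the EU’s AI Act entered into force in August 2024, with its bans applying from February 2025. It prohibits the worst practices, like social scoring and (outside narrow law-enforcement exceptions) real-time remote biometric identification in public spaces, and forces extra transparency and oversight for high-risk AI systems.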

The UK followed with its Data (Use and Access) Act 2025, basically saying: “Okay, AI can make some automated decisions, but only if humans can step in, you’ve got the right to appeal, and the process isn’t a total black box.”

And closer to home, Australia’s government has been pushing back against the likes of Meta, signalling that tougher privacy reforms are on the way—this time written with the AI era in mind.

Conclusion

The thing about AI companies? They’re in an all-out sprint—hoovering up data and scrambling to beat the competition. But in that mad dash, something always gets trashed: your rights, your privacy, and, bit by bit, the social fabric we all rely on.

That kind of power should come with responsibility, yet it’s too often missing. Where’s the consent? The oversight? The fairness? This isn’t just about slicker marketing or endless consumerism; it’s about protecting autonomy in a world where the invisible commercial gaze never blinks.

Don’t just take my word for it: look at the real toll social media has taken on young people. Studies and internal TikTok documents reveal (READ MORE HERE) that the app can become addictive in as little as 35 minutes, and that compulsive use is linked to harms such as anxiety, memory loss, and diminished empathy.

Even more chilling, unsealed internal videos show TikTok staffers raising concerns about the algorithm pushing disordered-eating content to teens, saying some users feel they “never want to leave.” And in France, seven grieving families have filed a lawsuit claiming TikTok’s algorithm exposed their children to harmful content, including self-harm material, contributing to devastating outcomes.

These stories aren’t isolated. They’re a warning: the race for growth, and the rush to embrace the new, too often ignores the real human cost.

So here’s the question facing us now: Are we going to learn from this? Or will we keep running hard toward the future—only to end up spending the next era cleaning up the wreckage caused by unchecked AI profiling and corporate ambition?

Posts In This Series

POST 2

POST 1

Further Reading + Sources + References

Khalaf AM, Alubied AA, Khalaf AM, Rifaey AA. The Impact of Social Media on the Mental Health of Adolescents and Young Adults: A Systematic Review. Cureus. 2023 Aug 5;15(8):e42990. doi: 10.7759/cureus.42990. PMID: 37671234; PMCID: PMC10476631 - SOURCE

TikTok knew depth of app’s risks to children, court document alleges - SOURCE

Reuters: Google, Character.AI must face lawsuit over teen’s death - LINK

Reuters: Facebook must face DC attorney general’s lawsuit tied to Cambridge Analytica scandal - LINK

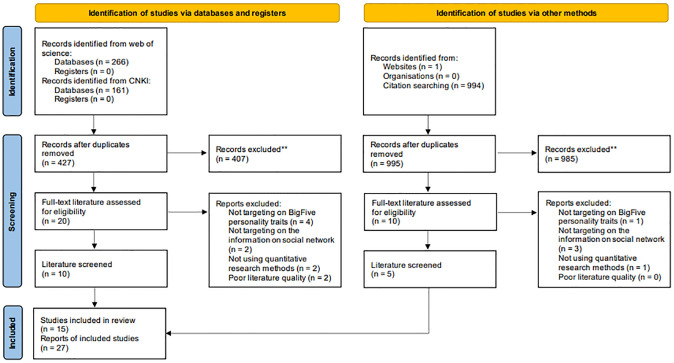

👉️ Social Media and the Big Five: A Review of Literature - LINK

👉️ Social profiling through image understanding: Personality inference using convolutional neural networks - LINK

✅ Want to learn how to update your privacy settings?

Naomi Brockwell’s NBTV YouTube channel features great explainers that are clear and easy to understand.

Want to dig a little deeper into the dark side of tech? Don’t worry—not tinfoil-hat deep, just enough to actually understand what’s going on.
