🗂️ About This Series

This is the first post in our six-part series on how personal data has been weaponised—from Cambridge Analytica to the AI-driven surveillance systems quietly profiling you today.

We’ll explore how these tools work, who’s using them, what they’re doing with your data—and what you can do to push back.

Let’s start at the beginning, with the case that blew the lid off: Cambridge Analytica.

We know this isn’t a post about residential construction or the building industry. And yes—we’ve got plenty more of those coming soon.

But we also feel it’s important to pause and talk about something bigger. Because the way technology—especially AI, machine learning, and big data—is being used today will shape how we live, work, and plan for the future.

This series isn’t a rant. It’s a reality check. A look back at how companies have already used personal data in deeply manipulative ways—and a warning about how those same patterns are being dressed up with new names and slicker branding.

Personally, I think AI is great. Some of the tools out there are genuinely incredible. But like the tech boom of the early 2000s, there’s also a mountain of hype, smoke, and self-serving noise—mostly coming from the same Silicon Valley firms that love to sell visions more than they deliver value.

Take Roblox, for example. Built as a virtual playground, it’s now facing lawsuits and international backlash. Critics accuse the company of prioritising user growth, engagement, and profits over the protection of children, even as vulnerabilities have emerged—predators using voice-altering software, simulated sexual content, and more.

Roblox isn’t alone—but it’s emblematic. Platforms thriving on engagement-at-all-cost create incentives that can drown out user safety.

Silicon Valley used to run on a pay-it-forward culture. These days, it’s more about pay-to-play. That shift matters—and it’s worth paying attention to.

Introduction

You probably suspect your phone is listening. (BTW: it is, and that’s recently been proven. Read about it here.)

But what if every single interaction—from customer calls to social media use—is logged, analysed, and added to a robust AI-assembled profile?

Today, these profiles are tied to our unique device IDs, cross-referenced, and networked together.

This isn’t Minority Report either; it’s already happening. Remember Cambridge Analytica? The political consultancy harvested data from up to 87 million Facebook users and turned it into predictive personality scores.

Critics and whistleblowers called it a psychological weapon: personal data used to sway elections. That scandal shocked the world, yet it barely scratched the surface of what today’s AI tools can do.

But emerging technologies and heightened awareness give us a chance to push back. We can learn from the past to protect the future by adopting privacy-preserving tools, demanding the right not to be profiled, and pushing for stronger regulation. The question is: will we act before the weapon becomes too precise to resist?

Cambridge Analytica as a “Data WMD”

So WTF exactly happened?

In the early 2010s, a Facebook app named “This Is Your Digital Life” harvested data not just from people who downloaded it, but from their friends too—without their knowledge. That expanded the dataset immensely.

Facebook itself later estimated that information on up to 87 million users was collected, including their likes, friends, and interests.

Using that data, Cambridge Analytica built detailed personality profiles, known as psychographic profiles, based on the Big Five traits: openness, conscientiousness, extraversion, agreeableness, and neuroticism. Instead of grouping people by age or location (demographics), they segmented them by behaviours and psychological traits. That’s what makes it powerful, and dangerous.

Unlike demographics, which capture obvious facts such as age or location, psychographics digs deeper into your mindset and motivations. Marketers and firms collect this information through surveys, social media behaviour, and other digital footprints.

When combined with large volumes of user data (like Facebook likes or browsing habits), psychographic profiles let companies like Cambridge Analytica predict, and even try to influence, the way you think or vote. This is why critics labelled it a real-life “data weapon.”
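To make the idea concrete, here is a toy sketch in Python. It is not Cambridge Analytica’s actual model; it simply assumes that each like nudges each Big Five trait by some weight, and that a person’s profile is the average of those nudges across everything they’ve liked. The like names, the weights in `LIKE_WEIGHTS`, and the function `psychographic_profile` are all invented for illustration.

```python
from statistics import mean

# The five Big Five (OCEAN) personality traits.
TRAITS = ("openness", "conscientiousness", "extraversion", "agreeableness", "neuroticism")

# HYPOTHETICAL per-like trait weights, made up purely for illustration.
# A real system would learn numbers like these from large numbers of users
# who also filled in a personality questionnaire.
LIKE_WEIGHTS = {
    "philosophy": {"openness": 0.8, "extraversion": -0.2},
    "parties": {"extraversion": 0.9, "conscientiousness": -0.3},
    "planners": {"conscientiousness": 0.7},
    "volunteering": {"agreeableness": 0.6},
    "horror films": {"neuroticism": 0.4, "openness": 0.2},
}


def psychographic_profile(likes):
    """Average the trait weights of every recognised like; unknown likes are ignored."""
    known = [LIKE_WEIGHTS[like] for like in likes if like in LIKE_WEIGHTS]
    if not known:
        # Nothing we can score: return a neutral profile.
        return {trait: 0.0 for trait in TRAITS}
    # For each trait, average its weight over all recognised likes
    # (a like that says nothing about a trait counts as 0.0).
    return {trait: round(mean(w.get(trait, 0.0) for w in known), 2) for trait in TRAITS}


if __name__ == "__main__":
    print(psychographic_profile(["philosophy", "parties", "cat videos"]))
```

The unsettling part is how little it takes: a list of likes plus a table of weights is enough to put a personality score on someone who never answered a single question about themselves.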

Why call it a “Data WMD”?

The whistleblower Christopher Wylie and others labelled this approach psychological warfare. By knowing someone’s personality, CA could craft personalised messages designed to play on emotions and sway decisions, especially during the 2016 U.S. presidential election and the Brexit referendum campaign.

In the words of Wylie: they could determine "what kind of messaging you’d be susceptible to" and push you toward a specific outcome.

So, what were the consequences?

Cambridge Analytica didn’t survive the fallout. After global outrage and mounting legal pressure, the company folded in May 2018, filing for bankruptcy. It was a textbook example of a business built on shady data practices finally crashing under the weight of public scrutiny.

Facebook—now rebranded as Meta—didn’t escape unscathed either. It faced a $5 billion fine from the U.S. Federal Trade Commission, which, at the time, was one of the largest penalties ever handed down for privacy violations. On top of that, it settled a separate class action with U.S. users for $725 million. Other regulators in places like the UK and Australia also imposed penalties for the company’s failure to protect user data.

And it’s not entirely in the rear-view mirror either. In 2025, a court in Washington, D.C., revived a long-running lawsuit accusing Meta of misleading consumers in relation to the Cambridge Analytica scandal, proof that data misuse has a long tail.

In July 2025, Mark Zuckerberg and other current and former Meta executives and directors settled a shareholder lawsuit that had sought $8 billion in damages. The settlement means Zuckerberg, Marc Andreessen, Sheryl Sandberg, and the other defendants will not be required to testify at trial. Read the France24 news article here.

If you did nothing wrong, why settle? Most likely because they made far more from exploiting the data in the first place, and the penalties are inconsequential to their money-printing enterprise.

Apparently morality and ethics aren’t taught in CompSci at Harvard. Or perhaps they came later in the syllabus, after the dropout had happened?

🧠 What this case teaches us

The biggest takeaway from the Cambridge Analytica scandal? Personal data is incredibly powerful—and dangerous—when mixed with behavioural science.

Cambridge Analytica might be gone, but its playbook hasn’t disappeared. In fact, it’s evolved. Techniques the scandal exposed, like psychographic targeting, once relied on manual analysis; now AI can automate them, not just at scale but in real time.

What’s more unsettling is that many of the same people behind the original operation didn’t fade away. They resurfaced in new companies, under new names, continuing the same kind of work. The machinery didn’t stop; it just got rebranded and kept moving.

Conclusion

The story of Cambridge Analytica isn’t history. It’s still around, just with a new face. The same tactics are still in use, just harder to detect, faster to deploy, and powered by AI instead of spreadsheets.

If we learnt anything from this scandal, it’s this: your data is valuable because it reveals who you are—and that makes it powerful.

In the next post in the series, we’ll look at what happened after Cambridge Analytica collapsed—and how the same people and playbook just moved on under new names.

24-08-2025 Update

Just released, The AI Safety Index by:

Further Reading + Sources + References

Reuters: Google, Character.AI must face lawsuit over teen’s death - LINK

Reuters: Facebook must face DC attorney general’s lawsuit tied to Cambridge Analytica scandal - LINK

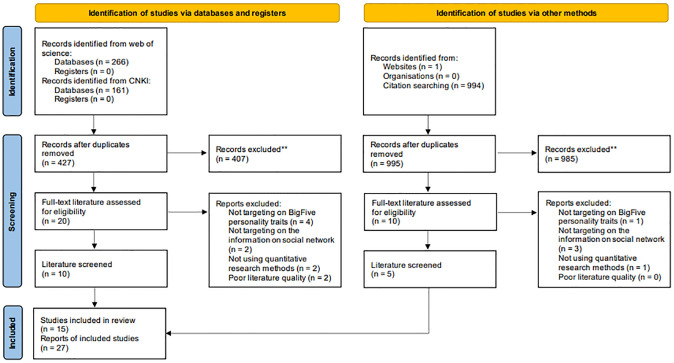

👉️ Social Media and the Big Five: A Review of Literature - LINK

👉️ Social profiling through image understanding: Personality inference using convolutional neural networks - LINK

✅ Want to learn how to update your privacy settings?

Naomi Brockwell’s NBTV YouTube channel features great explainers that are clear and easy to understand.
