Introduction

Remember that feeling that your phone is listening to you?

Well, in this series, we've shown it's not just listening—it's watching your every click, swipe, and pause, turning your life into a digital profile.

To put it simply, we've been discussing in the last 5 posts how your personal information gets collected and used by powerful AI systems.

Here's what we've covered so far:

- How your data is collected from everyday apps and websites.

- How companies use that data to target you with ads and content.

- How AI can now guess what you'll do next before you even do it.

- How this "personalisation" can sometimes be manipulation, especially for teenagers.

Just as people are waking up to these problems and asking for new rules to protect us, the big tech companies are fighting back—hard.

👉️ Recently (September 2025), Meta (the company that owns Facebook and Instagram) started a political group with tens of millions of dollars (2nd link here).

Its main goal? To fight against new laws that would regulate AI and protect your data. They're spending a fortune to make sure they can keep operating with as few rules as possible.

On one side, you have people asking for safety and privacy. On the other, you have companies spending huge amounts of money to avoid new regulations.

The good news is, things are starting to change. In Europe, new laws are banning the most manipulative AI. Here in Australia, a new law is coming to help keep younger kids off social media. But we can't rely on governments alone. They move too slowly, while the tech industry moves fast and breaks things.

In this post we're going to show you exactly what you can do to protect yourself, what businesses should be doing, and what we should all be demanding from our leaders.

We'll highlight what we think is a baseline for a "Digital Bill of Rights" that you can use to start conversations and push for change.

🛡️ The Big Problem: Rules Are Lagging Behind

So, we have all these amazing, powerful technologies. But here’s the core issue: the rules to keep them in check are way behind.

It's like we've built race cars before we've finished building the seatbelts and traffic lights.

The world is struggling to create a clear, strong set of rules that can protect people everywhere. This creates a patchwork of different laws in different countries, which means the harms from data misuse can travel across the internet in seconds, while the solutions get stuck arguing at the border.

Here’s what’s starting to change:

- Europe is Drawing Lines: The EU has started putting its new AI rules into effect. They've outright banned some of the scariest AI practices, like systems that manipulate you when you're vulnerable or that try to guess your emotions in your workplace or school. This sets a really important example for the rest of the world.

- Australia is Taking Steps: We're making progress too! The government has started updating our privacy laws, with more changes coming. We're also getting a new social media minimum age law on 10 December 2025, which will force platforms to actually check how old their users are. Let's see how effective this is. A $20 "old man" mask can fool the AI selfie verification, so I'm not holding my breath on this one working.

- But There's Pushback: As we mentioned, tech companies are spending huge amounts of money on lobbying. Their goal is to soften new rules and make sure regulations don't get too strict.

The Big Picture: "Data Colonialism"

People much smarter than me call what's happening "data colonialism."

It's the idea that big tech companies are treating our personal lives and experiences as a new type of raw material to be mined, just as colonial powers once extracted gold or cotton from their colonies.

Our personal information is extracted, sent to data centres overseas, and turned into profit for shareholders, often without us seeing much benefit in return. This is why having our own strong Australian rules isn't just about privacy—it's about maintaining control and making sure we benefit from our own digital lives.

🔮 What's Coming Next: The Big Shifts for 2025-2026

Okay, so we know the rules are playing catch-up. But what does that actually look like over the next year or two?

1. Bans with Real Teeth.

Europe is outlawing the worst AI abuses. This is a game-changer. When a market that big says "no" to manipulative AI, it forces global companies to change their products for everyone. We'll likely see other countries, including Australia, copy and paste these rules.

2. Putting Kids' Safety First.

Australia's new social media age law is a good start. It's not just about keeping under-16s off platforms; it's about forcing a fundamental shift. Platforms will now have to build "age assurance" tech and default to safer settings for young people. This will reshape how social media works for kids worldwide.

3. The Chess Match: States vs. The Feds.

In the U.S., there was a proposal to stop individual states from making their own AI laws for ten years. But the U.S. Senate rejected it in a near-unanimous vote. Why does this matter to us? It means there will be a "patchwork" of laws across America. This chaos will push companies to just adopt the strictest rules (often from California) as their global standard, which can end up protecting users everywhere.

4. "Show Your Work" Becomes Mandatory.

Get ready to hear a lot about "ISO/IEC 42001" and the "NIST AI RMF" (the AI Risk Management Framework from the U.S. National Institute of Standards and Technology). ISO/IEC 42001 is an international standard for AI management systems, and the NIST framework is voluntary U.S. guidance for managing AI risk. Soon, it won't be enough for a company to say "trust us." Boards, auditors, and insurers will demand they prove they're managing their AI risks properly. It's about moving from empty promises to provable safety.

5. No Hitting the Pause Button.

Despite some calls to slow down, the EU is sticking to its timeline. The rules are coming, and businesses need to get ready now. There's no more time to wait and see.

But the real question is: does this feel like enough to you? The "protect the kids" laws are a start, but they feel rushed and slow at the same time.

While we're carefully trying not to break any eggshells, the tech itself is already breaking things. Maybe it's time we started moving at the speed of the problem.

🚀 What You Can Actually Do About It

We've talked about the problems and the new rules. Now, let's get down to what you, your business, and our government can do right now.

A) For You and Your Family (Starting Today)

Think of it like digital home security. Start by locking down the basics: try using a more private browser like Firefox or Brave, turn on the setting that blocks third-party cookies, and get into the habit of cleaning up your app permissions every few months. Go through your phone and ask, "Does this game really need access to my contacts?"

Next, start asking questions. If an app you use has AI features, don't be shy. Ask them what data was used to train it, where your information is stored, and how you can delete your records if you want to. Demanding transparency is your right.

For families, this is especially important. Use the phone-level controls to limit screen time and app downloads. Most importantly, talk openly with your kids about how their social media feeds work—that they're not a neutral window to the world, but a filtered, algorithmic one designed to keep them scrolling.

B) For Businesses (Your To-Do List This Quarter)

If you're in a business using or developing AI, the time for vague promises is over. Your first step is to adopt a proper framework. This sounds hard, but it's just a structured way to manage your AI. Your insurance company is going to start demanding this!

The globally recognised standards are called ISO/IEC 42001 and the NIST AI RMF. Start simple: make a list of what AI models you use, where your data flows, who your vendors are, and what the potential risks are.
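To make "start simple" concrete, here is a minimal sketch of what an AI inventory could look like in code. The field names, the `AISystem` class, and the example entries are all made up for illustration; neither ISO/IEC 42001 nor the NIST AI RMF prescribes this exact shape.

```python
from dataclasses import dataclass, field


@dataclass
class AISystem:
    """One row in a hypothetical AI inventory."""
    name: str                 # what the team calls the model or feature
    vendor: str               # who supplies it ("in-house" if built internally)
    data_sources: list[str]   # where the data flowing into it comes from
    risks: list[str] = field(default_factory=list)  # known or suspected risks


def systems_missing_risk_review(inventory: list[AISystem]) -> list[str]:
    """Return the names of systems that have no risks recorded yet."""
    return [system.name for system in inventory if not system.risks]


# Illustrative entries only.
inventory = [
    AISystem("support-chatbot", "ExampleVendor", ["support tickets"],
             ["may expose customer details in replies"]),
    AISystem("churn-predictor", "in-house", ["billing history", "app usage"]),
]

print(systems_missing_risk_review(inventory))  # ['churn-predictor']
```

Even a list this basic gives you something to hand an auditor or insurer, and it shows straight away which systems nobody has looked at yet.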

A key principle to live by now is data minimisation. This means collecting only the data you absolutely need and not holding onto it forever. Before launching any new AI feature, run a privacy impact assessment—it's like a safety check to spot problems before they happen. This will directly prepare you for Australia's upcoming privacy reforms.
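For the developers reading, data minimisation can be as plain as an allow-list plus an expiry date. The sketch below assumes a made-up set of fields (`ALLOWED_FIELDS`) and a made-up one-year retention period (`RETENTION`); the right values depend on your product and your legal advice.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical: the only fields this feature genuinely needs.
ALLOWED_FIELDS = {"user_id", "email", "created_at"}
# Hypothetical retention period, chosen purely for illustration.
RETENTION = timedelta(days=365)


def minimise(record: dict) -> dict:
    """Keep only the fields on the allow-list and drop everything else."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def is_expired(record: dict, now: datetime) -> bool:
    """True when the record is older than the retention period."""
    return now - record["created_at"] > RETENTION


raw = {
    "user_id": 42,
    "email": "sam@example.com",
    "created_at": datetime(2024, 1, 1, tzinfo=timezone.utc),
    "contacts": ["..."],                    # not needed, so never stored
    "precise_location": (-33.87, 151.21),   # not needed, so never stored
}

stored = minimise(raw)
now = datetime(2025, 6, 1, tzinfo=timezone.utc)

print(sorted(stored))            # ['created_at', 'email', 'user_id']
print(is_expired(stored, now))   # True, so this record is due for deletion
```

The point of the allow-list is that new fields are dropped unless someone deliberately adds them, which is the opposite of hoarding by default.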

Make safety a default, not an option. For teen users, this means turning off targeted ads and disabling any "emotion inference" features. If your product is used by young people, the safest settings should be the automatic starting point.
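Here is one way "safety as a default" might look in code. This is a sketch under assumptions: the setting names, the `ADULT_AGE` threshold of 18, and the choice to treat an unknown age as a young user are all illustrative, not taken from any law or platform.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold; users of unknown age are treated as young users.
ADULT_AGE = 18
# Features a young user cannot switch on in this sketch.
LOCKED_FOR_YOUNG_USERS = {"targeted_ads", "emotion_inference"}


@dataclass
class Settings:
    """Every account starts on the safest settings."""
    targeted_ads: bool = False
    emotion_inference: bool = False
    private_profile: bool = True


def is_young_user(age: Optional[int]) -> bool:
    return age is None or age < ADULT_AGE


def enable(settings: Settings, feature: str, age: Optional[int]) -> bool:
    """Try to switch a feature on; return True only if it was allowed."""
    if not hasattr(settings, feature):
        raise ValueError(f"unknown setting: {feature}")
    if feature in LOCKED_FOR_YOUNG_USERS and is_young_user(age):
        return False
    setattr(settings, feature, True)
    return True


teen = Settings()
print(enable(teen, "targeted_ads", age=15), teen.targeted_ads)    # False False

adult = Settings()
print(enable(adult, "targeted_ads", age=30), adult.targeted_ads)  # True True
```

Notice that nobody has to do anything to be safe. The risky features only turn on when an adult actively chooses them.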

Finally, be explainable. People should know when they're interacting with AI. Provide clear signals when content is AI-generated and be ready to explain, in simple terms, how your models make decisions.

C) For Our Government (The 2026 Agenda)

The most critical task is to finish the Privacy Act overhaul, strengthening our rights to access and delete our data and adding a direct right of action if it's misused.

We need to codify clear red lines, just like the EU has. This means writing into law that manipulative and exploitative AI is banned, that biometric categorisation (like sorting people by sensitive traits) is reined in, and that emotion inference is restricted in schools and workplaces.

The new kids' safety law is a start, but it can't be the end. The government must pair it with real funding for youth mental-health research and independent evaluations of the age-assurance tech itself. We need annual reports on its actual impact, not just a "set and forget" law.

👉️ And finally, in light of the massive lobbying efforts we're seeing, we need sunlight. The government should require public disclosure of AI-policy lobbying and significant model-risk incidents. The public has a right to know who is shaping the rules and what's going wrong.

Even better - ban all political lobbying!

👉️ Voters elect politicians to serve the public. Lobbyists redirect them to serve the highest bidder.

This is the great subversion: the power we grant to serve the public is being auctioned to the highest bidder.

👉️ Lobbying, in its current form, institutionalises conflict. It manipulates outcomes away from the public good and toward private profit. We must dismantle this conflict by forcing all influence into the sunlight, ensuring policy is made for the public, not for purchase.

There are currently 721 registered lobbyists in Australia - see the list HERE

We need a fundamental shift in accountability. Perhaps it's time for a simple, stark principle: "You cause catastrophic harm, you do time."

This means the boards of directors and C-suites of large companies.

Consider the current calculus: a company illegally fires 289 staff because the profit for shareholders (cost/benefit) outweighs the potential fine. Or a telco with a mandate to provide emergency 000 services suffers a preventable outage that leads to multiple deaths.

This isn't just a cost of doing business; this is gross negligence. Under Work Health and Safety laws, company officers can face fines in the hundreds of thousands and years in prison for failing their duty of care. Where are the consequences here?

👉️ The equation is simple: No real consequences = no meaningful change. Capitalism is brilliant at calculating cost-benefit analyses to maximise shareholder value. When the "cost" of endangering public safety is just a manageable fine, it becomes a line item—not a deterrent. Public safety will never be a priority until the personal cost to decision-makers is unacceptably high.
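The cost-benefit logic above can be written out as a few lines of arithmetic. Every number below is invented for illustration, and the model is deliberately crude: it assumes a company only weighs its profit against the fine multiplied by the chance of actually being penalised.

```python
def expected_penalty(fine: float, chance_of_penalty: float) -> float:
    """The fine, discounted by how likely it is to be imposed at all."""
    return fine * chance_of_penalty


def breach_pays_off(profit: float, fine: float, chance_of_penalty: float) -> bool:
    """True when breaking the rules is still the profitable choice."""
    return profit > expected_penalty(fine, chance_of_penalty)


# Made-up figures, in dollars.
profit_from_breach = 50_000_000
chance_of_penalty = 0.5

small_fine = 10_000_000
large_fine = 200_000_000

print(expected_penalty(small_fine, chance_of_penalty))                     # 5000000.0
print(breach_pays_off(profit_from_breach, small_fine, chance_of_penalty))  # True
print(breach_pays_off(profit_from_breach, large_fine, chance_of_penalty))  # False
```

With the small fine, breaking the law is simply good business. The sum only flips when the penalty is large enough, or certain enough, to outweigh the profit.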

The OAIC (Office of the Australian Information Commissioner) must be empowered with sharper teeth and the mandate to use them. Today, accountability is an illusion: a game where the bar to prove a breach is set higher than the bar to commit one. When the penalty for breaking the law is less than the profit gained, the law itself becomes a suggestion.

You: Tidy up your app permissions and start asking companies tough questions.

Businesses: Adopt a safety framework (ISO/NIST), collect less data, and design for data security from the start, not after you're hacked.

Government: Finish the privacy reforms, ban manipulative AI, and fully fund the new safety laws.

✊ A Digital Bill of Rights: What We All Deserve

So, what does a future where technology serves people actually look like?

Here is what a Digital Bill of Rights should guarantee:

📜 A. The Principles of Control and Consent

- Explicit, Opt-In Permission: The era of assumed consent is over. The default for any data collection must be OFF. Companies cannot collect, use, or share any data—including background telemetry and diagnostics—without first obtaining our explicit, informed, and unambiguous opt-in consent. No more pre-ticked boxes or dark patterns.

- The Right to Understand: All terms must be in plain English. Legal documents must be short, readable, and use layered summaries (a simple headline, a short summary, full details) so a reasonable person can understand what they're agreeing to in under a minute. If it’s not understandable, it’s not enforceable.

- No More Manipulation: Dark patterns are banned. Consent must be freely given, without deceptive design tricks, nudges, or interfaces that confuse or pressure us into saying yes. Saying ‘no’ must be as easy as saying ‘yes’.
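For anyone building products, the consent principles above boil down to a simple rule: nothing is allowed until the user says yes, and saying no takes one step. The sketch below is a hypothetical `ConsentRecord` class with made-up purpose names, not a real library.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Tracks which purposes a user has explicitly opted in to."""
    granted: set[str] = field(default_factory=set)  # empty: everything starts OFF

    def opt_in(self, purpose: str) -> None:
        self.granted.add(purpose)

    def opt_out(self, purpose: str) -> None:
        # Saying no is as easy as saying yes: one call, no conditions.
        self.granted.discard(purpose)

    def allows(self, purpose: str) -> bool:
        # Anything the user has not explicitly opted in to is treated as a no.
        return purpose in self.granted


consent = ConsentRecord()
print(consent.allows("telemetry"))   # False, nothing is pre-ticked

consent.opt_in("telemetry")
print(consent.allows("telemetry"))   # True

consent.opt_out("telemetry")
print(consent.allows("telemetry"))   # False
```

The design choice that matters is the empty starting set. There is no way for a default to sneak in a yes on the user's behalf.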

📜 B. The Principles of Transparency and Fairness

- Radical Transparency: Companies must clearly disclose all commercial relationships and exactly what data is collected before gaining permission. This includes all background telemetry and data collection, which must be disclosed and turned off by default.

- Data Minimisation: Companies can only collect data that is strictly necessary for a clearly stated purpose. They can’t just hoard everything on the chance it might be useful later. If they don’t need it for the core function of their service, they can’t take it.

- The User Dividend: If a company profits from our data after we’ve opted in, we get a share. The mechanism for this profit-sharing must be transparently disclosed upfront. Our data creates their wealth; it’s time for a fair share.

- No Secret Scraping: Scraping data from non-sanctioned sources is theft, full stop. This is especially critical for sensitive biometric and facial recognition data, which must be off-limits without direct, specific consent.

📜 C. The Principles of Ownership and Agency

- You Buy, You Own: When you purchase software, a digital movie, an ebook, or a smart device, you are buying a product, not licensing a temporary, revocable access pass. The "you will own nothing" model is unacceptable. Companies cannot lock essential functionality behind ongoing subscriptions, remotely disable features, or render your property useless. The right to repair, modify, and use what you've purchased indefinitely is fundamental.

- Your Data, Your Choice: We must have a real right to access, portability, and deletion. This means the ability to easily download all our data in a usable format, take it to a competitor, and have it completely and verifiably deleted—fast, free, and without hassle.

- No Retaliation: Companies cannot punish us for exercising these rights. They cannot degrade service, charge fees, or deny access to core features if we choose to opt out of data sharing or modify the products we own. The choice must be truly free.

- The Right to Audit: For high-risk AI and data systems, companies must submit to independent, external audits. We have a right to know how these "black box" systems are making decisions that affect our lives, from loan applications to job recruitment.

📜 D. The Principles of Safety and Accountability

- Strict Boundaries: No cross-context tracking without fresh consent. Data collected for one purpose (e.g., your health data in a fitness app) cannot be used for another (e.g., targeted advertising) without asking you all over again in a clear and specific way.

- Child-First Design: Services used by children must have stricter defaults and limitations on data use by design, prioritising their wellbeing over profit. Their data deserves the highest level of protection.

- Security by Default: Companies must implement strong baseline security controls and are obligated to disclose data breaches promptly and clearly so we can protect ourselves. You can’t collect our data without also being responsible for protecting it.

- Pay Your Way: If a company generates substantial revenue from users in a country, it must pay taxes in that country. Profits generated from Australians should contribute to Australian society, helping to fund the services and infrastructure that support our digital lives.

- Unavoidable Consequences: Breaches of these rights must result in prohibitive, automatic fines based on a company's global revenue—a significant percentage, not a paltry fee. This makes violation a catastrophic business decision, not a calculated risk. The wrist-slaps are over.
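The "Strict Boundaries" principle in this list is easy to express in code: every piece of data remembers why it was collected, and any other use needs its own fresh consent. This is a minimal sketch with hypothetical names (`DataItem`, `can_use`) and made-up purposes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataItem:
    value: str
    collected_for: str   # the purpose the user originally agreed to


def can_use(item: DataItem, purpose: str, fresh_consents: set[str]) -> bool:
    """Allow the original purpose, or a new purpose the user has separately agreed to."""
    return purpose == item.collected_for or purpose in fresh_consents


heart_rate = DataItem("72 bpm", collected_for="fitness_tracking")

print(can_use(heart_rate, "fitness_tracking", set()))          # True
print(can_use(heart_rate, "targeted_advertising", set()))      # False
print(can_use(heart_rate, "targeted_advertising",
              {"targeted_advertising"}))                       # True
```

Consent given for the fitness app does not carry over to advertising. The second use is blocked until the user is asked again and agrees.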

🏁 You Have More Power Than You Think

AI isn't going away. And neither is the powerful incentive for companies to collect our data, take our secrets, and nudge our behaviour.

The thing is: we are not powerless.

The last year has proven that while Big Tech mobilises immense resources to shape the rules, lawmakers can be forced to listen.

Now, it's our turn to speak.

The most important takeaway from this entire series is this: you have the power to demand change.

👉️ Don't wait for someone else to act. If you are tired of your data being stolen and sold back to you, say something. Write to your local councillor. Email your state representative. Tell them enough is enough. Demand that they support and implement a Digital Bill of Rights. Use the technology for this purpose: to organise and to engage. It's never been easier to make your voice heard!

History shows us that change doesn't require a majority; it requires a committed minority.

If even a small share of the population, around 3%, makes a sustained effort, change becomes far more likely.

If you don't want a future of surveillance and techno-feudalism, the time to say something is not tomorrow—it is right now.

FAQs

1) Is the EU AI Act relevant to me in Australia?

Absolutely. When a market as big as the EU bans certain AI practices, global companies often apply those stricter rules everywhere. So, the EU's bans on manipulative AI are likely to become a de-facto global standard.

2) What exactly changes with Australia's social media age law?

Starting 10 December 2025, "age-restricted social media platforms" will be legally required to take "reasonable steps" to prevent children under 16 from creating accounts. The eSafety Commissioner has published guidance on what those steps should look like.

3) I heard Big Tech tried to stop regulation in the U.S. What happened?

A proposal was advanced that would have blocked individual U.S. states from making their own AI laws for a decade. However, the U.S. Senate removed this moratorium in a 99-1 vote, meaning states like California and Colorado are free to pioneer their own AI rules.

4) What's the first thing my company should do?

Start by adopting a framework. ISO/IEC 42001 (an AI management system) and the NIST AI RMF are the two most recognised. They help you inventory your AI, understand the risks, and build trust.

5) Do these steps help with Australia's privacy reforms?

Yes, directly. Practices like data minimisation (collecting only what you need), running privacy impact assessments, and being accountable align perfectly with the direction of Australia's upcoming Privacy Act reforms.

6) Does the new teen safety law cover gaming or chat apps?

The law targets "age-restricted social media platforms." The official guidance clarifies the scope, and regulators are focusing on services with messaging and community features, which could include some gaming and chat apps.

7) Is "emotion AI" actually banned anywhere?

Yes. The EU AI Act bans the use of emotion inference systems in workplaces and educational institutions. It also prohibits AI systems that exploit people's vulnerabilities. This sets a powerful legal precedent.

8) How can I personally push for this change?

You have a voice. Share this Digital Bill of Rights with your local MP. Ask your school to publish its policy on AI use. When a company tries to sell you software, ask them if they align with the NIST or ISO standards. Demand better.


Further Reading + Sources + References


From Instagram’s Toll on Teens to Un-moderated ‘Elite’ Users, Here’s a Break Down of the Wall Street Journal’s Facebook Revelations - LINK

TikTok knew depth of app’s risks to children, court document alleges - SOURCE

Reuters: Google, Character.AI must face lawsuit over teen’s death - LINK

Reuters: Facebook must face DC attorney general’s lawsuit tied to Cambridge Analytica scandal - LINK

Mark Zuckerberg and other current and former Meta executives have agreed to settle an $8 billion shareholder lawsuit, meaning Zuckerberg, Marc Andreessen, Sheryl Sandberg, and the other defendants will not be required to testify at trial. READ the France24 news article HERE

If you did nothing wrong, why settle? Most likely because the revenue from the data exploitation was far larger than any settlement, which makes the payout inconsequential to their money-printing enterprise.

Apparently morality and ethics aren't taught in computer science at Harvard. Or perhaps they come later in the syllabus, after the dropout had already happened?

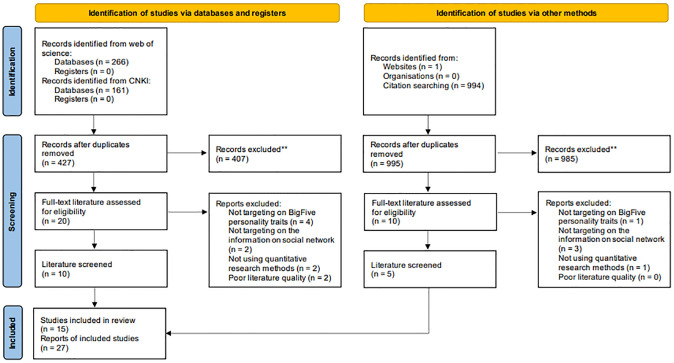

👉️ Social Media and the Big Five: A Review of Literature - LINK

👉️ Social profiling through image understanding: Personality inference using convolutional neural networks - LINK

✅ Want to learn how to update your privacy settings?

Naomi Brockwell’s NBTV YouTube channel features great explainers that are clear and easy to understand.

Or, if you’d like to dive a bit deeper into the tech side—without going overboard—check out this channel.
