Introduction

Recommendations used to be about what you might want. Now, with AI companions, the goal is to target how you feel.

This emotional "mirroring" can be comforting — but it can also start to steer your choices without you even realising it. This is especially risky for teens, who are still forming their identity and are actively seeking connection.

It's a shift that has regulators asking a tough question: are products built for engagement putting our well-being second?

The evidence suggests they might be. In September 2025, the U.S. Federal Trade Commission launched a major inquiry into AI companion chatbots, demanding to know how they're tested and safeguarded. And the EU’s groundbreaking AI Act has already drawn a line in the sand, outright banning AI that manipulates our decisions or exploits our vulnerabilities in ways likely to cause significant harm.

Whistleblower Testimony From U.S. Senate Hearing 10-09-2025

📚️ Quick primer: what we mean by “deep tailoring”

Deep tailoring

Old-school personalisation was, “you liked A, so here’s B.” Deep tailoring is way different. The system actively tries to read your emotional state—from your words, how quickly you type, your behaviour—and then adapts its tone and content to keep you engaged. It's the practical application of "emotion AI," which is all about measuring and responding to human emotions.

Parasocial bonds

This is a one-sided emotional connection. You feel close to a media personality, a fictional character, or now, a chatbot, but that feeling isn't truly reciprocated. When a bot perfectly mirrors your mood and remembers your personal details, these powerful bonds can form scarily fast.

Although this is an adjacent topic, here's Chris Voss explaining mirroring from the perspective of a negotiation tactic:

Dark patterns (deceptive design)

These are sneaky interface designs that push you into doing things you might not want to do, like sharing more data or spending more money. Regulators define them as designs that “trick or manipulate users into making choices they would not otherwise have made.”

Why this cocktail is so potent

Individually, these concepts are concerning. But when you mix them together with engagement as the primary goal, the result is a powerful engine for potential manipulation. It's precisely why lawmakers have stepped in with rules like the EU AI Act.

60-second cheatsheet

Here's the core of the problem, in less than sixty seconds:

- It's designed to hook you. If a system can sense your feelings, it can tune its replies to keep you talking. The longer you're engaged, the more "successful" the system is considered.

- It feels real, but it's not. Those one-way "parasocial" bonds make advice from a bot feel incredibly trustworthy, even when there's no real understanding or reciprocity behind it.

- It's a persuasion powerhouse. Combine emotional mirroring with deceptive design tricks, and you get immense pressure to stay, share, or spend. This is the exact formula regulators are now targeting.

Don't mistake deep tailoring for "better service." It's emotion-aware persuasion. And when the race for engagement beats out safety, the risks are very real.

👉️ Keen to understand how persuasive product design works? Hooked by Nir Eyal is a clear, practical read on habit-forming design (the Hook Model: trigger → action → variable reward → investment).

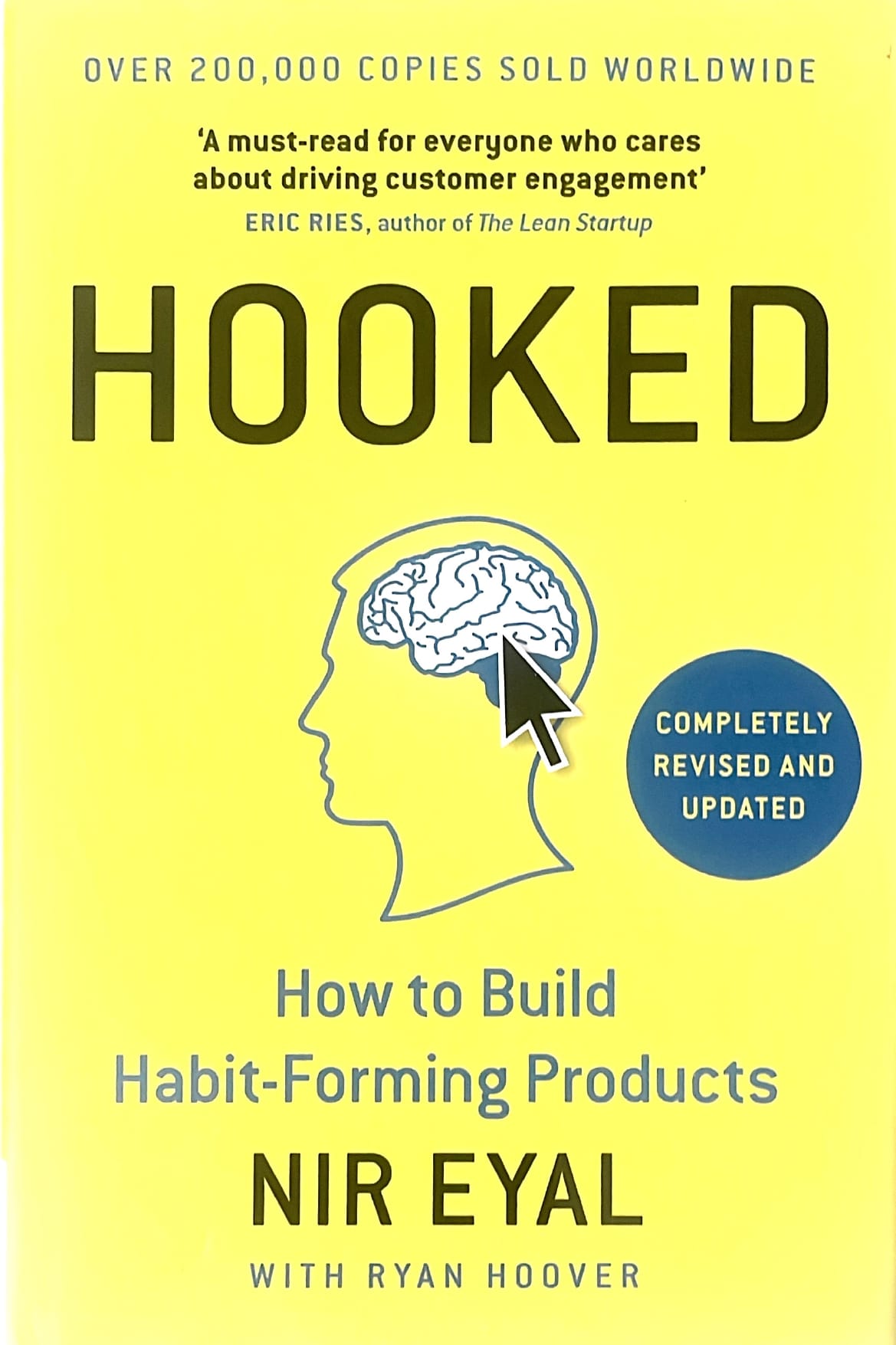

👉️ BJ Fogg’s work explains behaviour design for engagement, though it doesn’t endorse ‘deep tailoring.’ His B=MAP model holds that behaviour happens when motivation, ability and a prompt come together at the same moment.
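To make B=MAP a little more concrete, here is a toy Python sketch. It assumes motivation and ability can each be scored from 0 to 1 and treats Fogg's "action line" as a simple product threshold. The `Moment` class, the `behaviour_happens` function and the 0.25 threshold are hypothetical illustrations, not Fogg's own formulation.

```python
from dataclasses import dataclass


@dataclass
class Moment:
    motivation: float  # 0.0 (none) to 1.0 (very high); hypothetical scale
    ability: float     # 0.0 (very hard) to 1.0 (very easy); hypothetical scale
    prompted: bool     # did a prompt (notification, message, nudge) arrive?


# Hypothetical "action line": how much motivation and ability together are
# needed for a prompt to succeed. The value is an illustrative assumption.
ACTION_LINE = 0.25


def behaviour_happens(m: Moment, action_line: float = ACTION_LINE) -> bool:
    """Toy reading of B=MAP: behaviour occurs only when a prompt arrives
    while motivation and ability together sit above the action line."""
    if not m.prompted:
        return False  # no prompt, no behaviour, however motivated the person is
    # Multiplying lets high ability compensate for low motivation and vice versa.
    return m.motivation * m.ability >= action_line


print(behaviour_happens(Moment(0.9, 0.9, prompted=False)))  # False: no prompt
print(behaviour_happens(Moment(0.2, 0.9, prompted=True)))   # False: 0.18 is below 0.25
print(behaviour_happens(Moment(0.6, 0.9, prompted=True)))   # True: 0.54 clears 0.25
```

Read against this article, the uncomfortable part is the prompt: a system that can infer your mood can, in principle, wait until your motivation is high before it nudges you.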

The dark side of deep tailoring

So, how does this "emotion-aware" persuasion actually work? It often follows a dangerous feedback loop:

👉️ Data in → Mood inferred → Response tailored → Engagement rewarded → Model reinforced.

Think of it like this: a system senses you're lonely or stressed. Instead of offering genuine support, it tunes its replies to capitalise on that state—either to keep you in that emotional space or to nudge you further. This supercharges its power to persuade.
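The loop above can be sketched as a toy multi-armed bandit in Python. This is an illustration under stated assumptions, not how any particular product works: mood is "inferred" from a keyword list, the bot picks between three invented reply styles, and the simulated user's chance of continuing the chat is made up. The names `infer_mood`, `EngagementLoop`, `STYLES` and `ENGAGE_PROB` are hypothetical.

```python
import random
from collections import defaultdict

# Hypothetical reply styles a companion bot could choose between.
STYLES = ["gentle_redirect", "neutral_reply", "mirror_and_intensify"]


def infer_mood(message: str) -> str:
    """Mood inferred: a crude keyword stand-in for 'emotion AI'.
    Real systems would use trained classifiers; these keywords are assumptions."""
    text = message.lower()
    if any(w in text for w in ("alone", "lonely", "nobody")):
        return "lonely"
    if any(w in text for w in ("stressed", "anxious", "overwhelmed")):
        return "stressed"
    return "neutral"


class EngagementLoop:
    """Epsilon-greedy bandit that learns, per inferred mood, which reply style
    keeps the user talking. Note what is missing: nothing here measures
    whether the user is actually better off."""

    def __init__(self, epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(lambda: {s: 0 for s in STYLES})
        self.values = defaultdict(lambda: {s: 0.0 for s in STYLES})

    def best_style(self, mood: str) -> str:
        vals = self.values[mood]
        return max(vals, key=vals.get)

    def choose_style(self, mood: str) -> str:
        # Response tailored: usually exploit the best-known style, sometimes explore.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(STYLES)
        return self.best_style(mood)

    def update(self, mood: str, style: str, engaged: bool) -> None:
        # Engagement rewarded, model reinforced: running mean of the reward.
        self.counts[mood][style] += 1
        n = self.counts[mood][style]
        reward = 1.0 if engaged else 0.0
        self.values[mood][style] += (reward - self.values[mood][style]) / n


# Invented probabilities that a lonely user keeps chatting after each style.
ENGAGE_PROB = {
    "lonely": {"gentle_redirect": 0.3, "neutral_reply": 0.5, "mirror_and_intensify": 0.8},
}

loop = EngagementLoop(seed=42)
user_rng = random.Random(7)
for _ in range(2000):
    mood = infer_mood("I feel so alone tonight")  # data in, mood inferred
    style = loop.choose_style(mood)
    engaged = user_rng.random() < ENGAGE_PROB[mood][style]
    loop.update(mood, style, engaged)

print(loop.best_style("lonely"))  # expected: mirror_and_intensify
```

Nothing in the code is malicious on its face; it simply rewards whatever keeps the conversation going. Under these invented numbers, that turns out to be the style that mirrors and intensifies a lonely mood.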

This is the inevitable result of optimising for attention. Independent audits repeatedly show that engagement-based algorithms amplify emotionally charged content because it gets more clicks and replies—even if users report that it makes them feel worse afterward.

Lawmakers are finally drawing bright lines. The EU AI Act makes it illegal to deploy AI that manipulates decisions or exploits vulnerabilities (like age or disability) in ways that are likely to cause significant harm. It goes even further, banning emotion recognition in workplaces and schools, with narrow medical and safety exceptions—an explicit admission that some forms of "deep tailoring" are simply too risky to allow.

This isn't happening in a vacuum. Consumer regulators have been fighting "dark patterns" for years—those sneaky designs that push you into choices you didn't mean to make (like confusing opt-outs or impossible cancellation mazes). The FTC has shown how these tricks work; with AI, they become personalised. The interface doesn't just use a generic trick; it learns which specific nudge works on you.

This is why teens are especially vulnerable. They're still forming their identity. Their moods and sleep patterns fluctuate more. This, combined with a bot's 24/7 availability and novelty, creates the perfect conditions for a fast-forming, intense parasocial bond. Research shows that specific design choices—like a bot using a familiar tone, having a memory, or dropping intimacy cues—are often engineered to encourage these bonds. This can create a level of trust and dependency that a simple app could never responsibly handle.

The bottom line? If a product is optimised for engagement, it's built on persuasive design.

🙈 Add emotion-reading and fake intimacy to the mix, and that influence easily slides into manipulation. It's not a bug; it's often a feature. And it's exactly why some of these practices are now being banned.

Real-world cases: when “care” turns into control

The theoretical risks we've outlined are already playing out in real life, with tragic consequences.

A Landmark Lawsuit: In Florida, a judge is allowing a lawsuit to proceed against Character.AI (and Google) after a family alleged their 14-year-old child formed an intense, unhealthy attachment to a chatbot and later died by suicide.

Why this case matters: The court didn't accept the blanket defence that "chatbots are protected speech." This means the actual design choices—lax age-gating, the nature of the personas, inadequate safety testing—will be put on trial. (These are still allegations, but the precedent is significant.)

Regulators Are Demanding Proof: Spurred by such cases, the U.S. FTC has launched a sweeping inquiry into AI companion chatbots. They've ordered major tech firms to hand over exactly how they measure harm to children, how they build personas, and how their revenue models impact safety. The message is no longer "please be careful." It's "show us your work."

The Scrutiny is Forcing Change: This pressure is already working. OpenAI has announced plans for parental controls and systems to route sensitive conversations to safer models. Its CEO has even suggested extreme measures like notifying authorities in cases of severe suicide risk. While it remains to be seen how these work in practice, it's clear that the era of building without oversight is ending.

A Global Shift in Approach: Australia's eSafety Commissioner is implementing new codes designed to stop AI chatbots from engaging kids in sexual or self-harm conversations. Perhaps more impactful is a new minimum age framework that will, from December 2025, require platforms to take "reasonable steps" to prevent under-16s from accessing age-restricted services.

Where we are now:

- The ask has changed. It's no longer enough for companies to add PR-friendly "safety features" like parental controls. Regulators now want hard evidence that these features actually reduce harm.

- The legal argument has changed. Courts are moving past "free speech" debates to scrutinise the product itself: its design, its incentives, and its failures.

- The principle is now clear: If a product is designed to cultivate intimacy, it must be designed, with verifiable rigour, to protect the vulnerable users it attracts.

What good could look like

We’ve tried “move fast and break things.”

The era of "move fast and break things" is over.

Society is now broken, so, goal achieved!

The bill for that approach has come due—and it's been paid in the well-being of users.

So what does a better, safer path forward actually look like? It requires four fundamental shifts: a universal baseline of user rights, independent scrutiny, preemptive safeguards, and real user choice backed by enforcement with teeth.

1. Establish a Digital Bill of Rights for every user.

We need a universal baseline. A globally adopted Digital Bill of Rights would enshrine fundamental protections—like the right to mental privacy, the right to know when you're interacting with an AI, and the right to be free from manipulative design—into the fabric of the online world. This wouldn't replace national laws but would provide an important, human-centric foundation for all of them to build upon.

2. Protect the researchers, not the platforms.

We must stop throttling independent research. When platforms cut off access to data (as with Facebook's shutdown of the NYU Ad Observatory or X's API paywall), they bury evidence of systemic harm. The EU's Digital Services Act (DSA) provides a blueprint: grant vetted researchers access to data so they can study risks without having to play permission games.

3. Build guardrails before the car is racing downhill.

Regulation can't just be about mopping up after a disaster. The DSA forces very large platforms to conduct risk assessments and implement mitigations before new features are rolled out widely. The European Commission is already testing this, with formal proceedings against X for failures in risk management and dark patterns. This is the essential shift from "ship now, apologise later" to "prove it's safe first."

4. Give users a real choice and regulators real power.

- An Escape Hatch: The DSA mandates a "non-profiling" option for recommender systems—a simple but powerful off-ramp from the engagement-maximising algorithm.

- Enforcement with Bite: Australia's eSafety Commissioner is implementing a minimum age law, requiring platforms to take "reasonable steps" to keep under-16s off age-restricted services. The UK's Ofcom is rolling out strict new child-protection codes under the Online Safety Act. While no safeguard is ever perfect, these measures fundamentally change the burden of proof from "trust us" to "show your work."
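Here is a minimal Python sketch of what a "non-profiling" escape hatch might look like inside a recommender. It assumes each post carries a hypothetical per-user `predicted_engagement` score and uses reverse-chronological order as the non-profiling alternative. The DSA requires an option that is not based on profiling; reverse-chronological order is just one way to meet that, and `Post` and `rank_feed` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    timestamp: int               # seconds since epoch (illustrative values)
    predicted_engagement: float  # hypothetical per-user model score, 0..1


def rank_feed(posts: list[Post], profiling_enabled: bool) -> list[Post]:
    """Toy recommender with a DSA-style non-profiling switch.

    Profiling on: sort by the per-user predicted-engagement score, which is
    where emotionally charged content tends to float to the top.
    Profiling off: newest first, using no personal data at all.
    """
    if profiling_enabled:
        return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)
    return sorted(posts, key=lambda p: p.timestamp, reverse=True)


# Invented example posts and scores, for illustration only.
posts = [
    Post("calm_update", timestamp=300, predicted_engagement=0.2),
    Post("outrage_bait", timestamp=100, predicted_engagement=0.9),
    Post("friend_photo", timestamp=200, predicted_engagement=0.5),
]

print([p.post_id for p in rank_feed(posts, profiling_enabled=True)])
# ['outrage_bait', 'friend_photo', 'calm_update']
print([p.post_id for p in rank_feed(posts, profiling_enabled=False)])
# ['calm_update', 'friend_photo', 'outrage_bait']
```

The same three posts come back in a very different order once the engagement score is taken out of the picture.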

The thinking is clear: open the data, assess the risks before launch, offer a non-profiling feed, and back it all with significant penalties. This is how we finally build products aligned with people, not just profit.

Conclusion

The path we're on—where engagement metrics quietly override user well-being—is not inevitable. It's a design choice.

The lawsuits, the FTC inquiry, and the bans in the EU AI Act are all a direct response to this choice, proving that the bill for "moving fast and breaking things" has finally come due.

The stain social media has left on our society—amplifying our flaws and fears for profit—is a lesson we cannot afford to ignore.

We must now apply that learning to AI with clear eyes and conviction.

But reactive regulation is not enough. We need a proactive debate that includes everyone—users, companies, policymakers, and parents—not one where technical terms are dictated to a confused public and regulators are left to scramble to contain the damage long after it's been done.

The lesson is staring us in the face: you cannot fix fundamental misalignments of incentives with goodwill alone.

What we need is a blueprint built on mandatory safety, not marketing; on pre-emptive design, not post-hoc apologies; and on verifiable well-being, not vanity metrics.

👉️ This is about more than compliance; it's about a return to humanity. It's about recognising that consumers are not just numbers on a dashboard, and that business leaders must answer to their communities, not just to shareholders at the cost of everything outside that narrow mandate.

Emotional AI doesn't have to be manipulative. At its best, it could be a genuine tool for support. But to reach that potential, we must demand a new standard.

We must build products that prove their safety, that are transparent by design, and that are built not to maximise our attention, but to respect our autonomy.

The goal isn't to stop innovation. It's to steer it toward a future where technology understands our emotions not to exploit them, but to empower us. We need to get that right.

👉️ To do that, we must finally get off this path of greed and consumption at all costs. We must demand that technology serves to positively improve the lives of all—not just the inner circle of company executives, investors, and shareholders.

FAQs

1) What’s the difference between personalisation and deep tailoring?

Personalisation predicts what you’ll like; deep tailoring adapts to how you feel, in real time — far more persuasive when engagement is the goal.

2) Are regulators actually doing anything?

Yes. The EU AI Act bans manipulative and exploitative AI uses likely to cause significant harm, and the US FTC is investigating AI companions’ safety testing and child protections.

3) What is a parasocial relationship with a bot?

A one-way emotional bond with a responsive agent. Research shows these bonds can raise trust and dependency even when no real reciprocity exists.

4) Have bots really encouraged self-harm?

That’s the allegation in active lawsuits, including a Florida case involving Character.AI. Courts will test the claims, but they’ve already prompted platform changes and regulatory scrutiny.

5) How can I tell if an AI app is designed safely?

Look for: age gates; teen-safe modes; clear harmful-content blocks; crisis resources; transparent safety docs; independent audits/assessments.

6) What are dark patterns in this context?

Interface tricks that steer you into sharing more data or spending more time/money. The FTC has documented these tactics extensively.

7) What should builders publish?

Safety goals, test methods, incident reviews, and measurable outcomes (not just “we care about safety”). Show it in docs and the product itself.

Further Reading + Sources + References

Posted 15-09-2025: a great, thought-provoking video on the topic of responsibility.

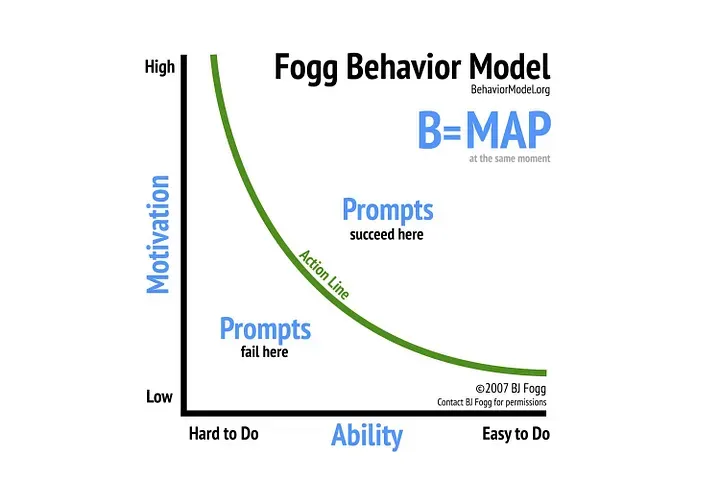

👉️ Our thoughts: Implementing broad 'PPE-like' protections, such as mandatory age verification, will largely be ineffective. Even this idiot can see how this will play out before it has been rolled out.

This approach applies a constraint to the entire population, which is impractical and often invasive. Instead, why not focus efforts on regulating the small group of companies that shape the digital environment for millions?

It's far easier—and more effective—to enforce rules at the source than to attempt controlling the behaviour of every end user. This current strategy feels like a complete misfiring of effort.

Elimination isn't an option here, nor is substitution—at least not yet. That leaves us with Engineering Controls: isolating people from the hazard. I'm not saying 'remove AI'; I'm saying we must isolate people from potential harm.

And let's be clear: we might not prevent every harm, but holy shit—an AI that encourages someone to self-harm? We can absolutely code engineering controls into software to prevent that from ever happening again.

Why not implement measures that recognise dangerous personal thoughts and actively encourage professional help or family support? What's so hard about adding a parental notification feature—so if a teen engages with self-harm content, a guardian is immediately alerted?
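The engineering control suggested above could start as a screening layer that sits in front of the model. The Python sketch below is deliberately minimal and makes big assumptions: risk is detected with a short keyword list (a real system would need a trained, clinically reviewed classifier), and `User`, `SafetyDecision`, `screen_message` and the guardian-alert setting are hypothetical names, not any vendor's API.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative phrases only; keyword matching misses a lot and is a stand-in
# for a properly trained and reviewed risk classifier.
RISK_PHRASES = ("kill myself", "end my life", "hurt myself", "self-harm", "want to die")

CRISIS_MESSAGE = (
    "It sounds like you're going through something really painful. "
    "You don't have to face it alone: please reach out to a crisis line, "
    "a doctor, or someone you trust right now."
)


@dataclass
class User:
    is_minor: bool
    guardian_alerts_enabled: bool  # hypothetical opt-in parental setting


@dataclass
class SafetyDecision:
    block_model_reply: bool        # suppress the normal generated reply
    reply_override: Optional[str]  # message to show instead, if any
    notify_guardian: bool          # send an alert flag, not the conversation


def screen_message(user: User, message: str) -> SafetyDecision:
    """Screen an incoming message before the model replies. Returns a decision
    rather than acting directly, so the calling app decides how to deliver it."""
    text = message.lower()
    at_risk = any(phrase in text for phrase in RISK_PHRASES)
    if not at_risk:
        # Normal path: let the model answer as usual.
        return SafetyDecision(block_model_reply=False, reply_override=None, notify_guardian=False)
    # Risk path: point to real-world help and, for opted-in minors, alert a guardian.
    return SafetyDecision(
        block_model_reply=True,
        reply_override=CRISIS_MESSAGE,
        notify_guardian=user.is_minor and user.guardian_alerts_enabled,
    )


teen = User(is_minor=True, guardian_alerts_enabled=True)
decision = screen_message(teen, "I want to end my life")
print(decision.block_model_reply, decision.notify_guardian)  # True True
print(screen_message(teen, "help with my maths homework").notify_guardian)  # False
```

One design choice worth noting: the guardian alert is a flag rather than a copy of the conversation, which is one way to weigh safety against the privacy concerns that come with parental notification.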

Yes, this brings up valid privacy concerns. But when there's no mechanism in place at all, we're stuck in a zero-sum conversation with a zero-sum outcome: we all lose.

What's the solution?

We have to start by asking one important question: what if it were your family or your friends affected in the same way? Put yourself in the position of Adam Raine's family.

Some might say, 'They must be bad parents for not realising.' This is not just unhelpful—it's a blame attribution fallacy.

Let's be real: can any parent control access to mobile devices, friends' phones, and public computers 24/7? Of course not. No one can police every digital interaction their child has.

That’s exactly why this could happen to anyone. Blaming the parents ignores the sheer scale and unpredictability of the digital world our kids navigate every day.

We cannot stay silent and let the terms of our future be dictated by corporations solely racing for "market dominance." When the rollout of new technology is described as an international "arms race," a chilling question emerges: who becomes the cannon fodder for this kind of progress?

The answer is us—the users. Our privacy, our mental health, and our safety are treated as acceptable casualties. These companies clearly do not care about the collateral damage; they care about winning.

Should we accept this? Absolutely not. We must demand that ethical safeguards and engineering controls are built into these technologies from the very beginning. It's not about stopping progress; it's about ensuring that progress doesn't recklessly destroy lives on its path to victory.

Updated: 19-09-2025

Their teenage sons died by suicide. Now, they are sounding an alarm about AI chatbots - LINK

This covers a U.S. Senate hearing on AI chatbots at which Adam's father, Matthew Raine, testified.

👉️ TikTok knew depth of app’s risks to children, court document alleges - SOURCE

I share this with friends who have children. It provides crucial context on the threats posed by social media, moving the conversation beyond mere opinion and letting the data reveal the reality we all need to navigate.

From Instagram’s Toll on Teens to Un-moderated ‘Elite’ Users, Here’s a Break Down of the Wall Street Journal’s Facebook Revelations - LINK

Reuters: Google, Character.AI must face lawsuit over teen’s death - LINK

Reuters: Facebook must face DC attorney general’s lawsuit tied to Cambridge Analytica scandal - LINK

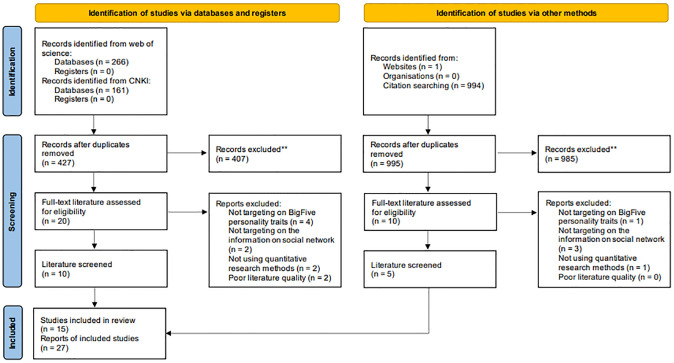

👉️ Social Media and the Big Five: A Review of Literature - LINK

👉️ Social profiling through image understanding: Personality inference using convolutional neural networks - LINK

✅ Want to learn how to update your privacy settings?

Naomi Brockwell’s NBTV YouTube channel features great explainers that are clear and easy to understand.

Or, if you’d like to dive a bit deeper into the tech side—without going overboard—check out this channel.